Financial technology, often referred to as Fintech, and sustainability are two of the biggest forces transforming many organizations. However, not all organizations pursue both with the same enthusiasm: leaders in Fintech do not always prioritize operating sustainably. It is therefore important to identify the synergies between Fintech and sustainability.

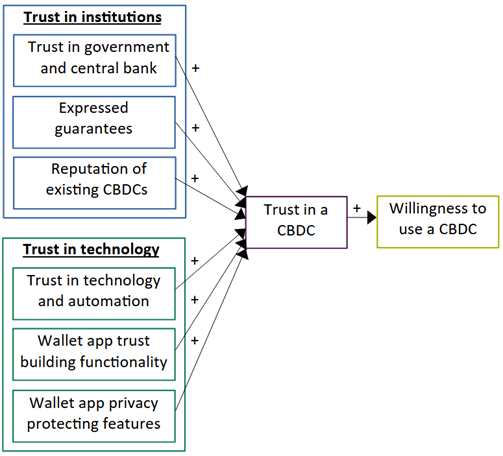

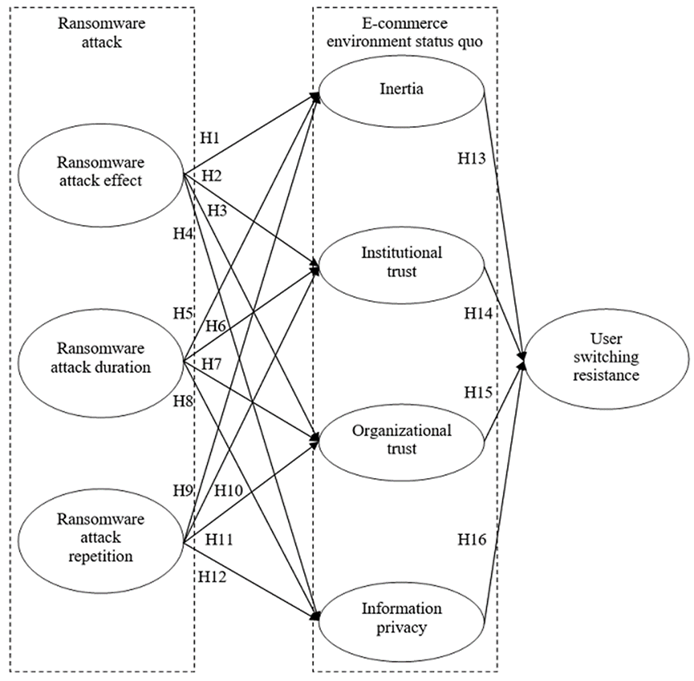

One important aspect of the transformation many organizations are going through is the consumer's perspective, particularly their trust, their concerns about the privacy of their personal information, and the vulnerability they feel. It is important to establish whether leadership in Fintech combined with leadership in sustainability is more beneficial than leadership in Fintech alone.

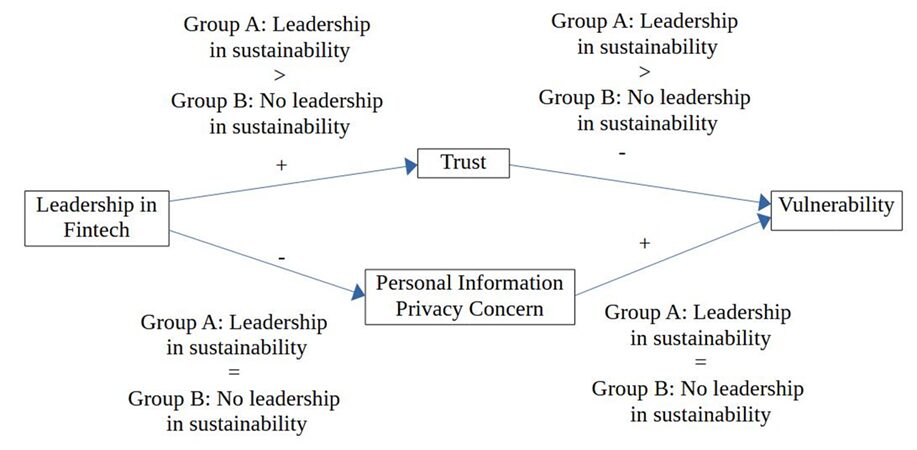

This research evaluates consumers' trust, privacy concerns, and vulnerability in the two scenarios separately and then compares them. First, it seeks to validate whether leadership in Fintech influences trust in Fintech, concerns about the privacy of personal information when using Fintech, and the feeling of vulnerability when using Fintech. It then compares trust, privacy concerns, and vulnerability in two scenarios: one with leadership in both Fintech and sustainability, and one with leadership in Fintech alone.

Figure 1. Leadership in Fintech, trust, privacy and vulnerability, with and without sustainability

The findings show that, as expected, leadership in both Fintech and sustainability builds more trust, which in turn reduces vulnerability more. Privacy concerns were lower when sustainability leadership and Fintech leadership came together; however, this difference was not found to be statistically significant. So, contrary to expectations, combining leadership in both was not shown to reduce privacy concerns more effectively.

The findings support a link between embedding sustainability in a Fintech's processes and its success. While the limited existing research on Fintech and sustainability finds support for a link between them by taking a 'top-down' approach, evaluating Fintech companies against benchmarks such as economic value, this research takes a 'bottom-up' approach by examining how consumers receive Fintech services.

An important practical implication of this research is that even when consumers trust Fintech enough to adopt and use it, they often still feel a sense of vulnerability. This means Fintech leaders should not only think about doing enough for consumers to adopt their service; they should go further, building trust and reducing privacy concerns to the point where consumers' sense of vulnerability is also reduced.

These findings can inform a Fintech’s business model and the services it offers consumers.

Reference

Zarifis A. (2024) ‘Leadership in Fintech builds trust and reduces vulnerability more when combined with leadership in sustainability’, Sustainability, 16, 5757, pp.1-13. https://doi.org/10.3390/su16135757 (open access)

Featured by FinTech Scotland: https://www.fintechscotland.com/leadership-in-fintech-builds-trust-and-reduces-vulnerability/