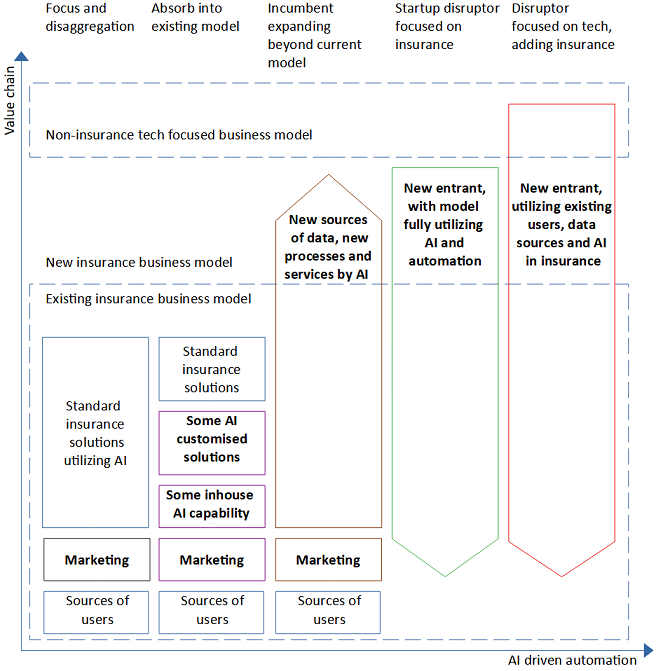

Artificial intelligence (AI) and related technologies are creating new opportunities and challenges for organizations across the insurance value chain. Incumbents are adopting AI-driven automation at different speeds, and new entrants are attempting to use AI to gain an advantage over them. This research explored four case studies of insurers’ digital transformation. The findings suggest that a technology-focused perspective on insurance business models is necessary, and that the transformation has reached a stage where the prevailing approaches can be identified. The findings identify five prevailing insurance business models that utilize AI for growth: (1) focus on a smaller part of the value chain and disaggregate, (2) absorb AI into the existing model without changing it, (3) incumbent expanding beyond the existing model, (4) dedicated insurance disruptor, and (5) tech company disruptor adding insurance services to its existing portfolio of services (Zarifis & Cheng, 2022).

In addition to the five business models illustrated in Figure 1, this research identified two useful avenues for further exploration. First, many insurers combined the first two business models: for some products, often the simpler ones such as car insurance, they focused and disaggregated, while in other parts of their organization they kept their existing model and absorbed AI into it. Second, new entrants can be separated into two distinct subgroups: (4) disruptors focused on insurance and (5) disruptors focused on tech that add insurance.

Reference

Zarifis, A., & Cheng, X. (2022). AI Is Transforming Insurance With Five Emerging Business Models. In Encyclopedia of Data Science and Machine Learning (pp. 2086–2100). IGI Global. https://www.igi-global.com/chapter/ai-is-transforming-insurance-with-five-emerging-business-models/317609 (open access)