New research!

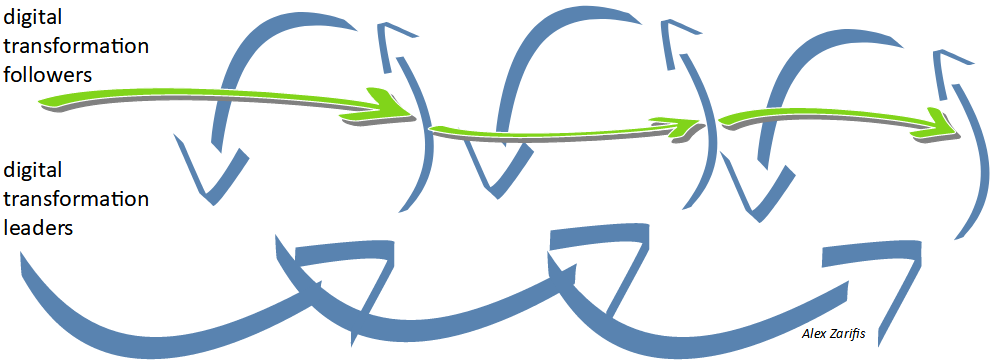

Fintech is changing the services offered to consumers and their relationship with the organizations that offer them. This change is neither top-down nor bottom-up. It is being driven by many different stakeholders in many parts of the world, which makes its final form hard to predict. This research identifies five Fintech business models that are well suited to AI adoption, growth and building trust (Zarifis & Cheng, 2023).

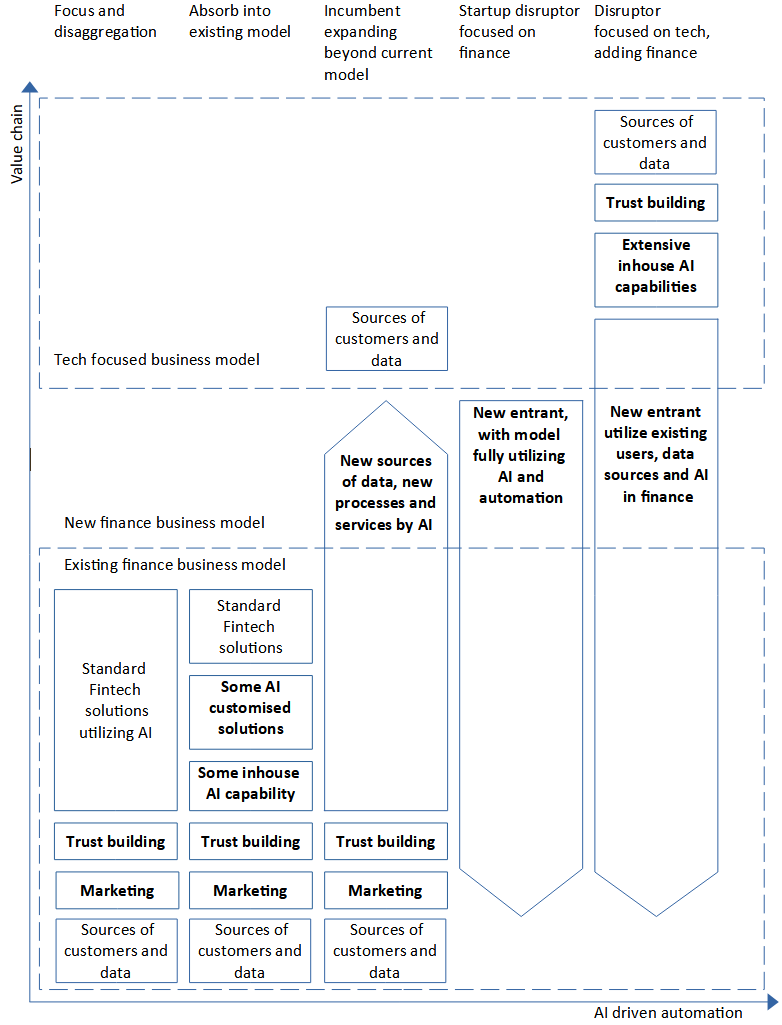

The five Fintech models are: (a) an existing financial organization that disaggregates and focuses on one part of the supply chain; (b) an existing financial organization that uses AI in its current processes without changing its business model; (c) an existing financial organization, an incumbent, that extends its model to use AI and reach new customers and data; (d) a startup finance disruptor that is involved only in finance; and (e) a tech company disruptor that adds finance to its portfolio of services.

Figure 1. The five Fintech business models that are optimised for AI

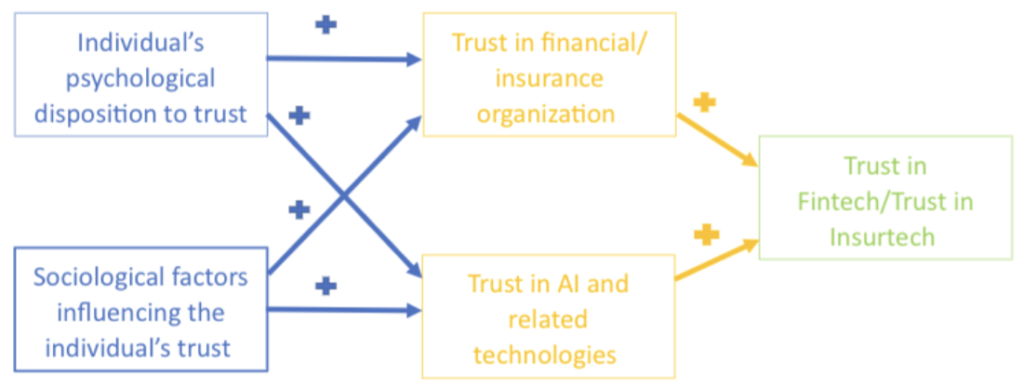

The five Fintech business models give an organization five proven routes to AI adoption and growth. Trust is not always built at the same point in the value chain, or by the same type of organization. It should usually be built where customers are attracted and onboarded. This means that while a traditional financial organization must build trust in its financial services, a tech-focused organization builds trust when customers are first attracted to its other, non-financial services.

This research also finds support for the view that, in all Fintech models, the way trust is built should be part of the business model. Trust is often not addressed at the level of the business model and is left to operations managers to handle. Given the complex, ad hoc relationships in Fintech ecosystems, however, this should be resolved before Fintech companies start trying to interlink their processes.

Alex Zarifis

Reference

Zarifis A. & Cheng X. (2023) ‘The five emerging business models of Fintech for AI adoption, growth and building trust’. In Zarifis A., Ktoridou D., Efthymiou L. & Cheng X. (eds.) Business digital transformation: Selected cases from industry leaders, London: Palgrave Macmillan, pp. 73-97. https://doi.org/10.1007/978-3-031-33665-2_4