Tim Berners-Lee, the creator of the world wide web, has released an important new book about the problems we face online and how to solve them. It is called *This Is for Everyone*, meaning that the internet should be for all.

The philosophy espoused in the book is that the internet should not be a tool for the concentration of power among an elite. He wants the internet to function in a way that maximises the benefit to society.

His central idea, as he has written before, is that people should own their data. Personal data is any data that can be linked to us, such as our purchasing habits, health information and political opinions.

Everyone owning their data is radically different from what we have today, where big tech companies own most of it. This change is needed for two reasons.

The first is people’s right to privacy. We should not feel as though we live in a glass box, with everything we do being monitored and potentially affecting our careers and the prices we pay for services such as insurance. If an AI system is steered to make more money for an insurer, it will do that, but it will not necessarily treat people fairly.

The second reason is that in a world being shaped by AI and data, if we do not own our data, we will have no power and no say in our future. For most of human history, workers’ labour was needed, and this gave them some power to pursue a fairer deal for themselves.

Most of us have the power to withhold our valuable labour if we feel we are not treated fairly, but this may not have the same effect in the future. For many of us, in the highly automated, AI-driven world we are moving towards, our labour will not always be needed. Our data, however, will be very valuable, and if people own their data, they will still have a voice. When a tech giant owns our data, it holds all the cards.

None of these ideas are new, but as with the creation of the world wide web, Berners-Lee excels at bringing the best ideas together into one coherent, workable vision.

Many people have pet hates about the internet. Some dislike how algorithms sometimes promote controversial views, while others don’t like handing over more personal information than a service actually needs. Berners-Lee’s ability to see the bigger picture comes from his knowledge, having had a front-row seat to the development of the world wide web from the start.

But what would this look like?

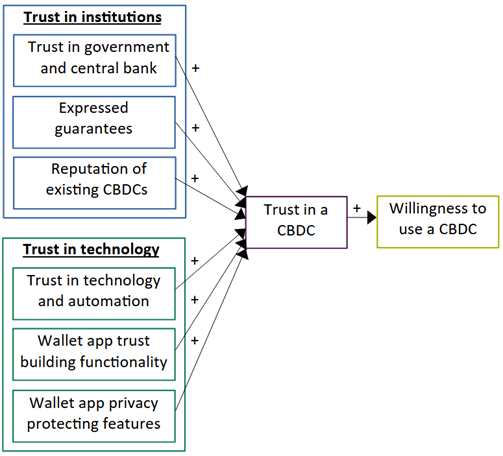

In practice, owning our data would mean having a data wallet app on our phone, to which internet companies could request access. In exchange for that access, the companies could offer a small payment or make their service free. The individual could choose to manage access themselves on a case-by-case basis, or delegate the management of their data to a trusted third party such as a data union.

Berners-Lee recommends two possible ways to break free from the oligopoly we are in. The first is for governments to intervene and create regulation that maximises the social good of the internet by limiting the power of big tech.

This is highly unlikely in the United States, where big tech is fully supported by the state. Although a US court recently decided that Google had acted illegally to keep its monopoly in search, it declined to break the company up under antitrust law, because doing so would be “messy”.

Elsewhere, though, for instance in the EU and Australia, there is a concerted effort to limit the internet’s negative effects on society. The EU keeps updating its General Data Protection Regulation (GDPR) so that it offers some protection to citizens’ privacy, while Australia has passed a world-first ban on social media for children under 16.

Berners-Lee’s vision would require governments to go further. He has repeatedly called on governments to regulate big tech, warning that failing to do so would lead to the internet being “weaponised at scale” to maximise profit rather than social good. Such regulation would aim to broaden competition beyond a small number of giant tech companies.

Beyond state intervention, Berners-Lee presents other ways forward. Perhaps, he contends, people themselves can begin building better alternatives. For example, more people could use decentralised social media such as Mastodon.social, which does not promote polarising views.

As he sees it, a key part of the problem is that we become tied into platforms run by the giants. Owning our data would go some way towards a fairer relationship. Instead of keeping us locked into an ever smaller number of big tech firms, it would open the door to new platforms offering a better deal.

Berners-Lee co-founded the Open Data Institute, which tries to build agreement on new online standards. He is promoting what he calls social linked data (Solid), and co-founded Inrupt, a company that offers an online wallet to store all our personal data. This could include our passport, qualifications and information about our health.

This decentralised model would let people analyse their data locally within the wallet to gain insights into their finances and health, without giving their data away. They would still have the option to share their data, but they would now do so from a position of strength.

Access would be given to a specific organisation, to use specific personal data, for a specific purpose. AI, even more so than the internet, gives power to whoever has the data. If the data is shared, so is the power.

Unlikely, but you never know

Despite the solutions he proposes, his vision is the underdog here. Its chances of prevailing against the power of big tech are limited. But it carries a powerful message that better alternatives are possible, and this message can motivate citizens and leaders to push for a fairer internet that maximises social good.

The future of the internet and the future of humanity are interwoven. Will we actively engage to shape the future we want, or will we be helpless, passive consumers? The worsening, or “enshittification”, of services has become an almost inevitable part of the innovation cycle. Many of us now wonder when, not if, the service we receive will start to degrade dramatically once we are locked in.

There is dissatisfaction, but it has not yet led people to change their habits, possibly because there have been no better alternatives. Berners-Lee made the world wide web a success because his solution was more decentralised than the alternatives. People are now seeing the results of an overcentralised internet, and they want to go back to those decentralised principles.

Berners-Lee has offered an alternative vision. To succeed, it would need support from both consumers and states. That may seem unlikely, but so, once, did the idea that the world would be connected via a single online information-sharing platform.

Article published in The Conversation, republished under Creative Commons licence.

Reference

Zarifis A. (2025) ‘Tim Berners-Lee wants everyone to own their own data – his plan needs state and consumer support to work’, The Conversation. Available from: https://doi.org/10.64628/AB.yq5sssjr3